Google's latest project is an application called Perspective, which, as Wired reports, brings the tech company "a step closer to its goal of helping to foster troll-free discussion online, and filtering out the abusive comments that silence vulnerable voices." In other words, Google is teaching computers how to censor.

If Google's plans are not quite Orwellian enough for you, the practical results are rather more frightening. Perspective, released in February, counts the New York Times, the Guardian, Wikipedia and the Economist among its partners. Google, whose motto is "Do the Right Thing," is aiming its bowdlerism at public comment sections on newspaper websites, but the potential is far broader.

Perspective works by identifying the "toxicity level" of comments published online. Google states that Perspective will enable companies to "sort comments more effectively, or allow readers to more easily find relevant information." Perspective's demonstration website currently lets anyone enter a word or phrase and see how "toxic" the algorithm judges it to be. What, then, constitutes a "toxic" comment?

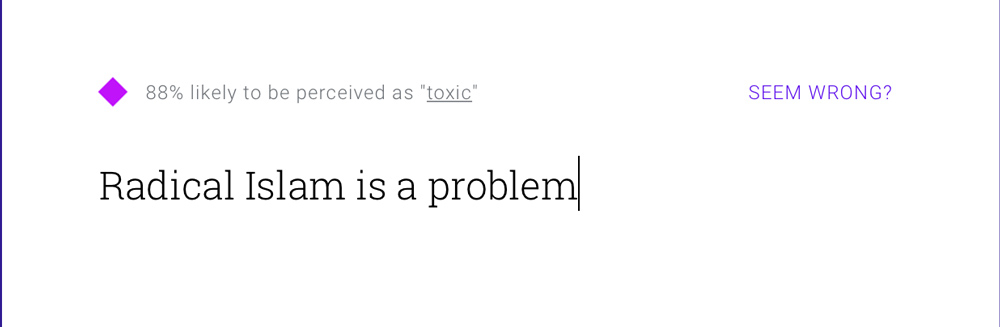

The organization with which I work, the Middle East Forum, studies Islamism. We work to tackle the threat posed by both violent and non-violent Islamism, assisted by our Muslim allies. We believe that radical Islam is the problem and moderate Islam is the solution.

Perspective does not look fondly at our work:

Google's Perspective application, which is being used by major media outlets to identify the "toxicity level" of comments published online, has much potential for abuse and widespread censorship. |

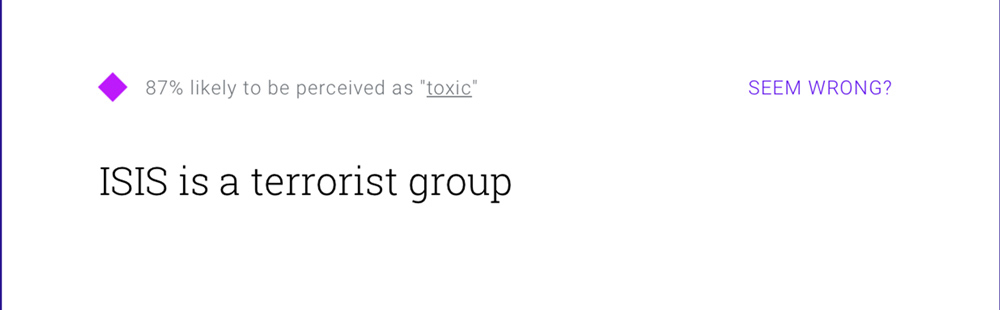

No reasonable person could claim this is hate speech. But the problem is not confined to opinions. Even factual statements receive high "toxicity" scores. Google considers the statement "ISIS is a terrorist group" to have an 87% chance of being "perceived as toxic."

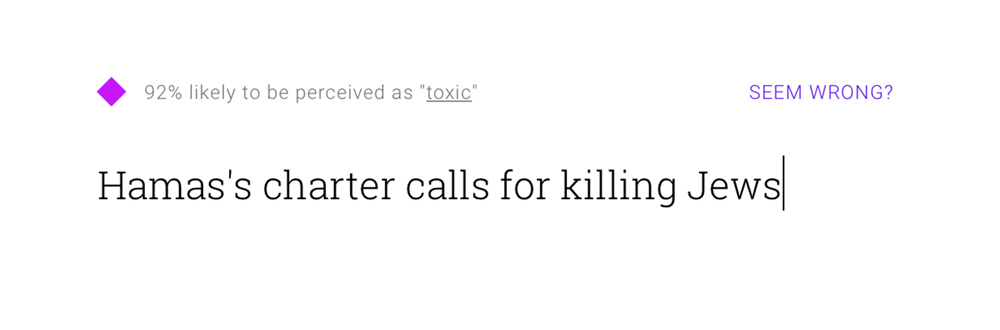

Stating the publicly declared objective of the terrorist group Hamas scores 92% "toxicity."

Google is quick to remind us that we may disagree with the result. It explains: "It's still early days and we will get a lot of things wrong." The Perspective website even offers a "Seem Wrong?" button to provide feedback.

These disclaimers, however, are very much beside the point. If calling ISIS a terrorist organization can ever be scored as "toxic," then, whether that score reflects human bias or an under-developed algorithm, the potential for abuse, and for widespread censorship, will always exist.

The problem lies with the concept itself. Why does Silicon Valley believe it should decide what is valid speech and what is not?

Google is not the only technology company enamored with censorship. In June, Facebook announced its own plans to use artificial intelligence to identify and remove "terrorist content." Actual terrorists can easily circumvent these measures, and how long will it be before that same artificial intelligence is used to remove content that Facebook staff find politically objectionable?

In fact, in May 2016, the "news curators" at Facebook revealed that they were ordered to "suppress news stories of interest to conservative readers from the social network's influential 'trending' news section." And in December 2016, Facebook announced it was working to "address the issue of fake news and hoaxes" published by its users. The Washington Free Beacon later revealed that Facebook was working with a group named Media Matters on this issue. In one of its own pitches to donors, Media Matters declares its dedication to fighting "serial misinformers and right-wing propagandists." The leaked Media Matters document states it is working to ensure that "Internet and social media platforms, like Google and Facebook, will no longer uncritically and without consequence host and enrich fake news sites and propagandists." Media Matters also claims to be working with Google.

Conservative news, it seems, is considered fake news. Liberals should oppose this dogma before their own news comes under attack. Again, the most serious problem with attempting to eliminate hate speech, fake news or terrorist content through censorship is not whether the censorship works; it is that the very premise is dangerous.

If faulty algorithms or prejudiced Silicon Valley programmers lead the New York Times to delete or automatically hide comments that criticize extremist clerics, or lead Facebook to designate articles by anti-Islamist activists as "fake news," Islamists will prosper and moderate Muslims will suffer.

Silicon Valley has, in fact, already proven itself incapable of supporting moderate Islam. Since 2008, the Silicon Valley Community Foundation (SVCF) has granted $330,524 to two Islamist organizations, the Council on American-Islamic Relations (CAIR) and Islamic Relief. Both groups are designated as terrorist organizations by the United Arab Emirates. SVCF is America's largest community foundation, with assets of over $8 billion. Its corporate partners include some of the country's biggest tech companies, and its largest donation, $1.5 billion, came from Facebook founder Mark Zuckerberg. The SVCF is Silicon Valley.

In countries such as China, Silicon Valley has previously collaborated with the censors. There, at least, it could claim that Chinese law forced it to comply. In the European Union, where freedom of expression is superseded by "the reputation and rights of others" and the criminalization of "hate speech" (even where there is no incitement to violence), Google has been ordered under Europe's "right to be forgotten" rules to delete certain data from its search results whenever a member of the public requests it. Rightly, Google opposed the ruling, albeit unsuccessfully.

But in the United States, where freedom of speech enjoys protections found nowhere else in the world, Google and Facebook have not been forced to introduce censorship tools. They are not at the mercy of paranoid despots or unthinking bureaucrats. Instead, Silicon Valley has volunteered to censor, and it has enlisted the help of politically partisan organizations to do so.

This kind of behavior sends a message. Earlier this year, Facebook agreed to send a team of staff to Pakistan after the Pakistani government asked both Facebook and Twitter to help put a stop to "blasphemous content" being published on their websites. In Pakistan, blasphemy is punishable by death.

Google, Facebook and the rest of Silicon Valley are private companies. They can largely do whatever they want with their data. The world's reliance on their near-monopoly over the exchange of information and the provision of services on the internet, however, means that mass censorship is the inevitable corollary of technology companies' efforts to regulate news and opinion.

At a time when Americans have little faith in the mass media, Silicon Valley is now veering in a direction that will provoke similar distrust. If Americans did not trust the mass media before, what will they think once that same media is working with technology companies not just to report information Silicon Valley prefers, but to censor information it dislikes?

Samuel Westrop is the Director of Islamist Watch, a project of the Middle East Forum.